Use the update-environment command in the AWS Command Line Interface (AWS CLI) to disable autoscaling by setting the minimum and maximum number of workers to be the same.

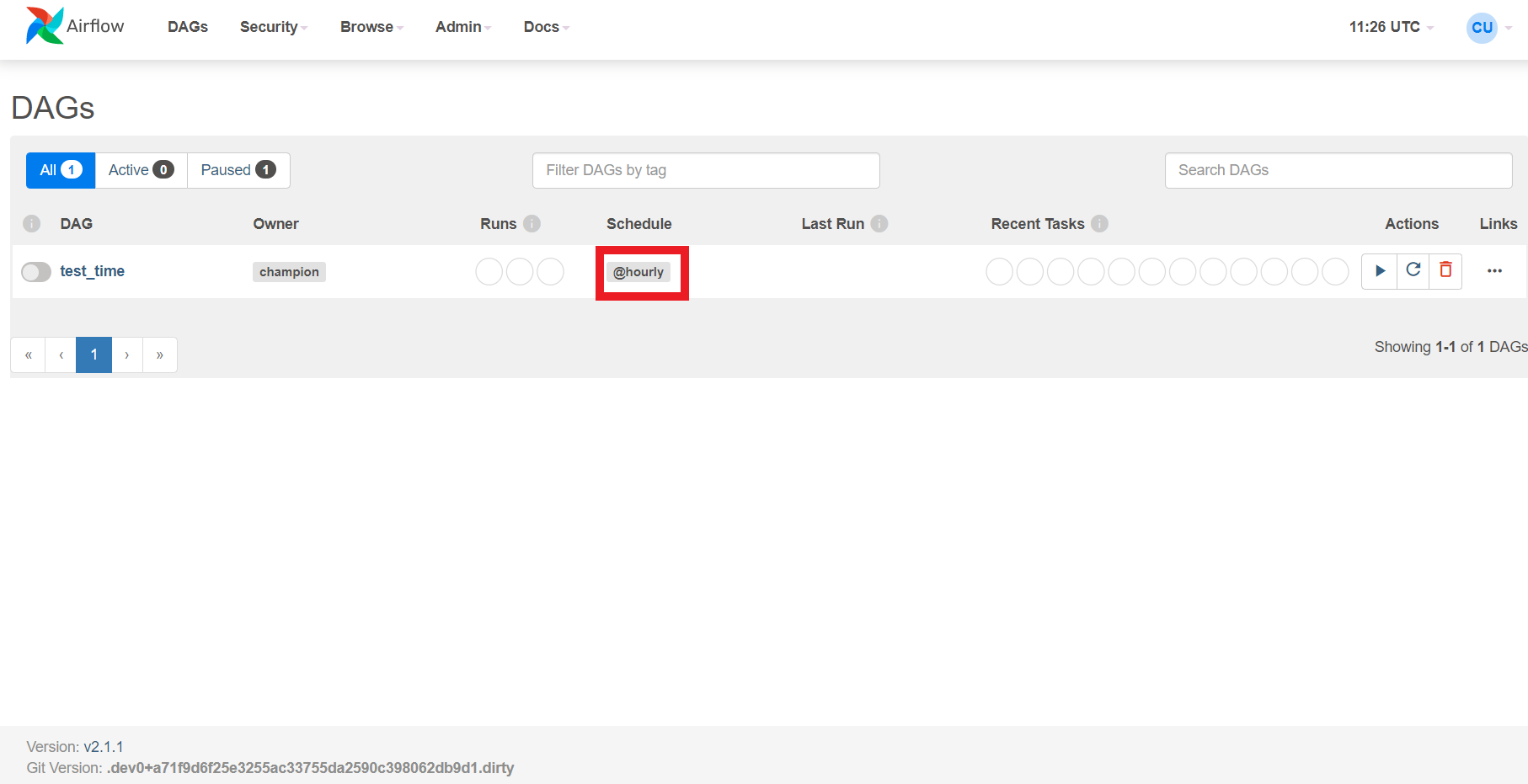
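Here is a minimal sketch of the same change made from Python. It assumes the boto3 SDK is installed and AWS credentials are configured; the `mwaa` client's `update_environment` call accepts `Name`, `MinWorkers`, and `MaxWorkers`. The helpers `pin_worker_count` and `as_cli` are hypothetical, and `MyEnvironmentName` is the placeholder environment name used in this post. The client is passed in rather than created inside the function, so you can inspect the request without touching AWS.

```python
from typing import Any, Dict, Optional


def pin_worker_count(name: str, workers: int, client: Optional[Any] = None) -> Dict[str, Any]:
    """Build an update_environment request with MinWorkers == MaxWorkers.

    If `client` (assumed to be boto3.client("mwaa")) is given, the request is sent;
    otherwise it is only returned, so it can be checked without AWS credentials.
    """
    if workers < 1:
        raise ValueError("worker count must be a positive integer")
    request = {"Name": name, "MinWorkers": workers, "MaxWorkers": workers}
    if client is not None:
        client.update_environment(**request)
    return request


def as_cli(request: Dict[str, Any]) -> str:
    """Render the same request as the equivalent AWS CLI command line."""
    return (
        f"aws mwaa update-environment --name {request['Name']} "
        f"--min-workers {request['MinWorkers']} --max-workers {request['MaxWorkers']}"
    )


if __name__ == "__main__":
    req = pin_worker_count("MyEnvironmentName", 5)
    print(as_cli(req))
    # To apply it for real (needs boto3 and AWS credentials):
    #   import boto3
    #   pin_worker_count("MyEnvironmentName", 5, boto3.client("mwaa"))
```

Run as a script, this prints `aws mwaa update-environment --name MyEnvironmentName --min-workers 5 --max-workers 5`. Passing the same number for both bounds gives autoscaling nothing to adjust. Pick a value large enough for your peak load, because the environment will no longer scale up.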

Some tasks may be deleted mid-execution. They show up as task logs that stop with no further indication in Apache Airflow. This can happen when there is a brief moment where 1) the current tasks exceed the current environment capacity, followed by 2) a few minutes of no tasks executing or being queued, then 3) new tasks being queued. Amazon MWAA autoscaling reacts to the first scenario by adding workers. In the second scenario, it removes the additional workers. Some of the newly queued tasks may be assigned to workers that are in the process of being removed, and those tasks end when the container is deleted.

We recommend increasing the minimum number of workers on your environment. Another option is to adjust the timing of your DAGs and tasks so that these scenarios don't occur. You can also set the minimum workers equal to the maximum workers on your environment, which effectively disables autoscaling.

There may also be a large number of tasks in the queue. This often appears as a large, and growing, number of tasks in the "None" state, or as a large number in Queued Tasks and/or Tasks Pending in CloudWatch. It can happen for two reasons: there are more tasks to run than the environment has the capacity to run, or a large number of tasks were queued before autoscaling had time to detect them and deploy additional workers.

If there are more tasks to run than the environment has the capacity to run, we recommend reducing the number of tasks that your DAGs run concurrently, and/or increasing the minimum number of Apache Airflow workers. If a large number of tasks were queued before autoscaling had time to detect them and deploy additional workers, we recommend staggering task deployment and/or increasing the minimum number of Apache Airflow workers. You can use the `update-environment` command in the AWS CLI to change the minimum or maximum number of workers that run on your environment:

`aws mwaa update-environment --name MyEnvironmentName --min-workers 2 --max-workers 10`

To learn more about the best practices we recommend for tuning the performance of your environment, see Performance tuning for Apache Airflow on Amazon MWAA.

We've been experiencing the same issues (Airflow 2.0.2, two schedulers, MySQL 8). Steps undertaken:

- Stop the Airflow scheduler.
- Delete all DAGs and their logs from the folders and from the database.
- Restart the Airflow scheduler.
- Copy the DAGs back to their folders and wait.

Scheduled tasks still show up as failed in the GUI and in the database. Furthermore, when a task has `depends_on_past=True`, the DAG locks completely, because no future runs can be created.

The Airflow scheduler monitors all tasks and DAGs, then triggers the task instances once their dependencies are complete. In your `airflow.cfg` you can define the scheduling timezone. For Amsterdam, for example, set `default_timezone = Europe/Amsterdam` in the `[core]` section. This makes the entire Airflow installation schedule based on Amsterdam time.
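The queued-task guidance above comes down to simple arithmetic. The sketch below is a back-of-the-envelope model, not an MWAA API. `WorkerPool` and its methods are hypothetical, and `tasks_per_worker` (how many task instances one worker runs at once) is an assumed placeholder; replace it with the real value for your worker size. The bounds of 2 and 10 workers mirror the CLI example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkerPool:
    min_workers: int
    max_workers: int
    tasks_per_worker: int  # assumed concurrency per worker (placeholder value)

    def start_immediately(self, queued: int) -> int:
        """Tasks that fit on the minimum workers, i.e. before autoscaling adds any."""
        return min(queued, self.min_workers * self.tasks_per_worker)

    def workers_wanted(self, queued: int) -> int:
        """Workers needed to run every queued task at once, clamped to [min, max]."""
        needed = math.ceil(queued / self.tasks_per_worker)
        return max(self.min_workers, min(self.max_workers, needed))

    def overflow(self, queued: int) -> int:
        """Tasks that still wait even when the pool is at max_workers."""
        return max(0, queued - self.max_workers * self.tasks_per_worker)


if __name__ == "__main__":
    pool = WorkerPool(min_workers=2, max_workers=10, tasks_per_worker=5)
    for queued in (4, 73):
        print(queued, pool.start_immediately(queued), pool.workers_wanted(queued), pool.overflow(queued))
```

With these placeholder numbers, 10 of 73 queued tasks can start before autoscaling reacts, and 23 would still wait even at 10 workers. A positive overflow points toward reducing DAG concurrency. A large gap between the queue length and `start_immediately` points toward staggering tasks or raising the minimum number of workers.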
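A toy model can illustrate the `depends_on_past=True` lock described above. This is a simplified simulation, not Airflow's scheduler. `simulate` is a hypothetical helper that follows Airflow's documented rule: a task instance runs only if the same task succeeded or was skipped in the previous DAG run. In a real deployment, stalled runs can also fill a DAG's `max_active_runs` slots, which is one plausible way to end up with no new runs being created.

```python
from typing import List

# States after which a depends_on_past=True successor is allowed to start.
UNBLOCKING = ("success", "skipped")


def simulate(outcomes: List[str]) -> List[str]:
    """Play one task across consecutive DAG runs with depends_on_past=True.

    outcomes[k] is what the task would do in run k if it were allowed to start
    ("success", "failed" or "skipped"). Runs whose predecessor did not succeed
    or skip never start and are reported as "waiting".
    """
    states: List[str] = []
    for k, outcome in enumerate(outcomes):
        # The first run has no previous instance, so it is never blocked here.
        if k == 0 or states[k - 1] in UNBLOCKING:
            states.append(outcome)
        else:
            states.append("waiting")
    return states


if __name__ == "__main__":
    print(simulate(["success", "failed", "success", "success"]))
```

A single failure in the second run leaves every later run waiting. In this model, only clearing or fixing that failed instance would let the later runs proceed.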

If you're using more than 50% of your environment's capacity, you may start overwhelming the Apache Airflow scheduler. This leads to a large Total Parse Time in CloudWatch Metrics or long DAG processing times in CloudWatch Logs. There are other ways to optimize Apache Airflow configurations, which are outside the scope of this guide.
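Here is a rough way to see how close a DAG folder is to the 50% guideline, under two assumptions. First, "capacity" is read as the number of DAGs the environment is sized for. That reading is our interpretation, and `dag_capacity` is a placeholder you would take from your environment class documentation. Second, a file counts as a possible DAG file if its text mentions both "airflow" and "dag", similar to the heuristic Airflow applies in DAG discovery safe mode. Because Airflow parses DAG files whether or not the DAGs are enabled, the count ignores pause state. `candidate_dag_files` and `dag_load` are hypothetical helpers.

```python
import sys
from pathlib import Path
from typing import List, Tuple


def candidate_dag_files(dags_folder: str) -> List[Path]:
    """Python files that might define a DAG (contain both "airflow" and "dag").

    Paused and unpaused DAGs are counted alike, since parsing ignores pause state.
    """
    found = []
    for path in Path(dags_folder).rglob("*.py"):
        text = path.read_bytes().lower()
        if b"airflow" in text and b"dag" in text:
            found.append(path)
    return sorted(found)


def dag_load(dags_folder: str, dag_capacity: int, threshold: float = 0.5) -> Tuple[int, float, bool]:
    """Return (file count, fraction of dag_capacity, whether it exceeds threshold)."""
    if dag_capacity <= 0:
        raise ValueError("dag_capacity must be positive")
    count = len(candidate_dag_files(dags_folder))
    ratio = count / dag_capacity
    return count, ratio, ratio > threshold


if __name__ == "__main__":
    folder = sys.argv[1] if len(sys.argv) > 1 else "dags"  # hypothetical default path
    if not Path(folder).is_dir():
        print(f"no DAG folder at {folder!r}")
    else:
        count, ratio, over = dag_load(folder, dag_capacity=50)  # placeholder capacity
        print(f"{count} DAG files, {ratio:.0%} of capacity" + (" (above 50%)" if over else ""))
```

The file count is only a proxy. A single file can define several DAGs, and parse cost depends on what each file does at import time, so treat the result as a first signal rather than a measurement.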

# Airflow scheduler not starting #

If the scheduler is not running, it might be due to a number of factors, such as dependency installation failures. There may also be a large number of DAGs defined. Airflow parses DAGs whether they are enabled or not, so reduce the number of DAGs and perform an update of the environment (such as changing a log level) to force a reset.

I installed Airflow from the GitHub source and configured it with a MySQL metadata database and the LocalExecutor. When I tried to start my webserver, it would not start; the output shows TTOU signal handling and an exiting worker. The relevant lines of my `airflow.cfg` are `# The home folder for airflow, default is ~/airflow` and `sql_alchemy_conn =`.
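For a self-managed installation like the one described above, a quick sanity check of `airflow.cfg` can rule out some configuration mistakes before you dig into webserver logs. This is a minimal sketch, not an Airflow tool. `check_airflow_cfg` is a hypothetical helper that reads the file with Python's `configparser` with interpolation disabled, since `airflow.cfg` contains literal `%` format strings. It looks only at `executor`, `sql_alchemy_conn`, and `default_timezone`. It relies on two Airflow 2.x behaviours: `sql_alchemy_conn` moved from `[core]` to `[database]` in Airflow 2.3, and the LocalExecutor cannot use a SQLite metadata database. The connection string in `SAMPLE` is a made-up placeholder. It needs Python 3.9+ for `zoneinfo`.

```python
import configparser
from typing import Optional
from zoneinfo import ZoneInfo, ZoneInfoNotFoundError

# Hypothetical example file; the connection string is a made-up placeholder.
SAMPLE = """
[core]
# The home folder for airflow, default is ~/airflow
executor = LocalExecutor
default_timezone = Europe/Amsterdam

[database]
sql_alchemy_conn = mysql+mysqldb://airflow_user:CHANGE_ME@localhost:3306/airflow
"""


def check_airflow_cfg(text: str) -> dict:
    """Return the settings of interest plus a list of detected problems."""
    # interpolation=None reads values literally; airflow.cfg contains %-style log formats.
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.read_string(text)
    problems = []

    executor: Optional[str] = cfg.get("core", "executor", fallback=None)

    # Airflow 2.3+ reads sql_alchemy_conn from [database]; older versions use [core].
    conn = cfg.get("database", "sql_alchemy_conn", fallback=None) or cfg.get(
        "core", "sql_alchemy_conn", fallback=None
    )
    backend = conn.split("://", 1)[0] if conn else None
    if backend is None:
        problems.append("sql_alchemy_conn is empty or missing")
    elif backend.startswith("sqlite") and executor == "LocalExecutor":
        problems.append("LocalExecutor cannot use a SQLite metadata database")

    # "utc" (the default) and "system" are accepted as-is; anything else must be an IANA name.
    timezone = cfg.get("core", "default_timezone", fallback="utc")
    if timezone.lower() not in ("utc", "system"):
        try:
            ZoneInfo(timezone)
        except (ZoneInfoNotFoundError, ValueError):
            problems.append(f"unknown default_timezone: {timezone}")

    return {"executor": executor, "backend": backend, "timezone": timezone, "problems": problems}


if __name__ == "__main__":
    print(check_airflow_cfg(SAMPLE))
```

An empty `sql_alchemy_conn =` line, as in the snippet above, is reported as a problem. The timezone check depends on the IANA time zone data available to Python, so a missing `tzdata` installation can also make a valid name look unknown.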
